Accelerate business outcomes with rapid data validation

Aug. 25, 2021 | By Naveen Kumar Madhamshetty

The digital economy is reinventing itself every hour, demanding next-gen strategy and operations: automation, DevOps and Agile. From automating manual processes to moving data centers and large applications to the cloud, organizations are looking to become digitally enabled now more than ever, which inherently means becoming data-enabled.

For data modernization initiatives, ingesting data from multiple data sources requires data consistency, accuracy, integrity and completeness. But many developers still validate data manually before it is consumed by downstream layers, a process that is time-consuming and increases the risk of inaccurate or flawed data.

Examples of common data quality issues are:

- Special characters in data that cause row/column shifting

- Data mismatches that lead to failures in production

- Missing values that cause failures in processes that require non-null values

- Aggregations of incorrect data that lead to poor business decisions

- Increased costs and missed opportunities
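To make the first issue concrete, here is a minimal Python sketch using only the standard library. The sample data and the function names (`naive_column_counts`, `parsed_column_counts`, `shifted_rows`) are hypothetical and not part of TAF. It shows how a delimiter inside a field shifts columns when a file is split naively, and how a simple per-row column-count check catches the shift.

```python
import csv
import io

# Hypothetical raw extract: the second record's city field contains a comma.
RAW = 'id,name,city\n1,Ana,Austin\n2,Ben,"Portland, OR"\n'

def naive_column_counts(text):
    """Split each line on commas without honoring quotes (the fragile approach)."""
    return [len(line.split(",")) for line in text.strip().splitlines()]

def parsed_column_counts(text):
    """Parse with the csv module, which respects quoted fields."""
    return [len(row) for row in csv.reader(io.StringIO(text))]

def shifted_rows(counts):
    """Return indexes of rows whose column count differs from the header's."""
    expected = counts[0]
    return [i for i, n in enumerate(counts) if n != expected]

print(shifted_rows(naive_column_counts(RAW)))   # [2]
print(shifted_rows(parsed_column_counts(RAW)))  # []
```

A column-count check like this is cheap enough to run on every ingested file before any downstream validation.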

To avoid these issues, a robust data validation framework, such as the test automation framework (TAF), is needed to process the data and ensure it’s accurate for consumption.

What is the test automation framework (TAF)?

The test automation framework is an automated approach to data quality, validation and comparison, designed specifically for end-to-end test orchestration across the data modernization project life cycle. Built on Apache Spark and Python, TAF can scale to large datasets that typically live in a distributed system, data warehouse or data lake.

TAF encourages users to focus on how their data should look instead of worrying about how to implement the actual validation checks. That’s why the framework takes a declarative approach: users define data quality checks by composing a wide variety of prebuilt validations. Through its metadata-driven design, users can define data validation rules, validate the data against defined business rules, customize the flows and verify data quality on the fly.
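The article does not show TAF’s rule format, so the following is only a minimal sketch of what a declarative, metadata-driven check definition can look like. The rule dictionaries, the check names (`not_null`, `unique`, `between`) and the `validate` function are hypothetical. Users only list rules as data, while a registry of prebuilt checks does the actual work.

```python
from typing import Any, Callable

# Hypothetical rule metadata: users describe *what* the data should look like.
RULES = [
    {"column": "id", "check": "not_null"},
    {"column": "id", "check": "unique"},
    {"column": "amount", "check": "between", "min": 0, "max": 10_000},
]

def _not_null(values, rule):
    return all(v is not None for v in values)

def _unique(values, rule):
    non_null = [v for v in values if v is not None]
    return len(non_null) == len(set(non_null))

def _between(values, rule):
    # Nulls are skipped here; a separate not_null rule covers them.
    return all(rule["min"] <= v <= rule["max"] for v in values if v is not None)

# Registry of prebuilt checks; rules reference them by name only.
CHECKS: dict[str, Callable[[list, dict], bool]] = {
    "not_null": _not_null,
    "unique": _unique,
    "between": _between,
}

def validate(rows: list[dict[str, Any]], rules: list[dict]) -> list[tuple[str, str, bool]]:
    """Apply each declared rule to its column and return (column, check, passed)."""
    results = []
    for rule in rules:
        values = [row.get(rule["column"]) for row in rows]
        passed = CHECKS[rule["check"]](values, rule)
        results.append((rule["column"], rule["check"], passed))
    return results

rows = [
    {"id": 1, "amount": 250},
    {"id": 2, "amount": 12_500},
    {"id": 2, "amount": None},
]
for result in validate(rows, RULES):
    print(result)
# ('id', 'not_null', True)
# ('id', 'unique', False)
# ('amount', 'between', False)
```

Because the rules are plain data, they could equally be loaded from a metadata table or a configuration file without changing the validation code.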

Ultimately, data validation systems must scale seamlessly to large datasets. To address this requirement, TAF translates the required data metric computations into summary queries, which a distributed execution engine can execute efficiently at scale.
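As a minimal sketch of the idea, and not TAF’s actual implementation, the Python snippet below compiles several hypothetical metric definitions into one aggregation query. It runs that query against an in-memory SQLite table from the standard library, so every metric is computed in a single pass over the data. In Spark, the same pattern would map to a single `DataFrame.agg(...)` call. The check names and the `build_summary_query` and `compute_metrics` helpers are illustrative assumptions.

```python
import sqlite3

# Hypothetical metric definitions; each compiles to one SQL aggregate expression.
CHECKS = [
    ("row_count", "COUNT(*)"),
    ("null_ids", "SUM(CASE WHEN id IS NULL THEN 1 ELSE 0 END)"),
    ("distinct_ids", "COUNT(DISTINCT id)"),
    ("min_amount", "MIN(amount)"),
    ("max_amount", "MAX(amount)"),
]

def build_summary_query(table: str, checks) -> str:
    """Combine all metric expressions into a single-pass aggregation query."""
    select_list = ", ".join(f"{expr} AS {name}" for name, expr in checks)
    return f"SELECT {select_list} FROM {table}"

def compute_metrics(conn: sqlite3.Connection, table: str, checks) -> dict:
    """Run the summary query once and map each alias to its value."""
    cursor = conn.execute(build_summary_query(table, checks))
    names = [col[0] for col in cursor.description]
    return dict(zip(names, cursor.fetchone()))

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE orders (id INTEGER, amount REAL)")
conn.executemany(
    "INSERT INTO orders VALUES (?, ?)",
    [(1, 10.0), (2, 99.5), (2, 5.0), (None, 42.0)],
)
metrics = compute_metrics(conn, "orders", CHECKS)
print(metrics)
# {'row_count': 4, 'null_ids': 1, 'distinct_ids': 2, 'min_amount': 5.0, 'max_amount': 99.5}

# Individual validations become cheap comparisons on the precomputed metrics.
has_nulls = metrics["null_ids"] > 0
has_duplicates = metrics["row_count"] - metrics["null_ids"] > metrics["distinct_ids"]
print(has_nulls, has_duplicates)  # True True
```

The expensive part, scanning the data, happens once. Each individual validation is then a comparison on a handful of numbers.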

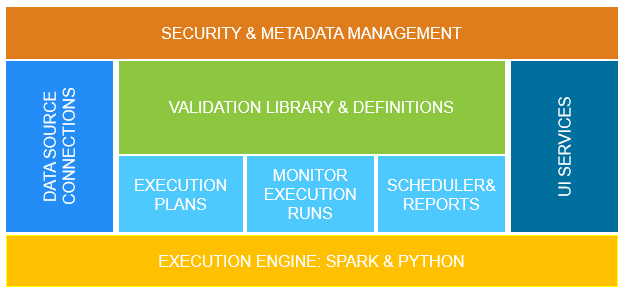

What are the architecture components of the test automation framework?

TAF includes a set of components that enable test execution and the reporting of results. The main components are:

- Validation library: TAF provides prebuilt validations such as row counts, duplicate checks, cell-to-cell comparisons, column validations, data type checks, minimums and maximums, correlations and more.

- Execution engines: TAF uses Python or Spark as execution engines to read data sources and compute validations through an optimized set of aggregation queries.

- Test definitions: Users define the set of data quality checks the data must satisfy, and TAF derives the set of validations that need to be computed.

- Execution plans: TAF groups test validations into a logical plan that you define once and can run multiple times.

- Monitoring execution history: TAF allows you to monitor the execution history of data validations performed on the dataset.

- Reports: TAF generates a data validation report that contains the result of the execution plans.

- Scheduler: TAF can run the execution plans at regular intervals without manual intervention.

- UI service: TAF incorporates user-friendly interfaces to define test validations, create test plans, and schedule and monitor the entire data validation life cycle.
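One of the library validations, cell-to-cell comparison, is especially common in data migration work. The sketch below shows one way such a comparison can work, assuming both datasets fit in memory as lists of dictionaries and share a unique key column. The function name `cell_to_cell_diff` and the sample data are hypothetical, and TAF’s own comparison logic is not described in the article.

```python
from typing import Any

Row = dict[str, Any]

def cell_to_cell_diff(source: list[Row], target: list[Row], key: str) -> dict:
    """Match rows on `key`, then compare every column of each matched pair.

    Assumes `key` is unique in both datasets (run a duplicate check first);
    duplicate keys would silently overwrite each other in the lookups below.
    """
    src = {row[key]: row for row in source}
    tgt = {row[key]: row for row in target}
    mismatches = []
    for k in sorted(src.keys() & tgt.keys()):
        for column in sorted(src[k].keys() | tgt[k].keys()):
            s, t = src[k].get(column), tgt[k].get(column)
            if s != t:
                mismatches.append((k, column, s, t))
    return {
        "row_count_match": len(source) == len(target),
        "missing_in_target": sorted(src.keys() - tgt.keys()),
        "extra_in_target": sorted(tgt.keys() - src.keys()),
        "cell_mismatches": mismatches,
    }

source = [
    {"id": 1, "name": "Ana", "city": "Austin"},
    {"id": 2, "name": "Ben", "city": "Boston"},
    {"id": 3, "name": "Cy", "city": "Chicago"},
]
target = [
    {"id": 1, "name": "Ana", "city": "Austin"},
    {"id": 2, "name": "Ben", "city": "Bostn"},
    {"id": 4, "name": "Di", "city": "Denver"},
]
print(cell_to_cell_diff(source, target, key="id"))
```

Note that matching row counts alone would hide both the missing record and the extra one, which is why row-level and cell-level checks are worth running together.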

Test automation framework process flow

TAF supports data validation at scale and meets the requirements of several kinds of projects, including data modernization, data migration, machine learning, data profiling and data standardization initiatives.

The automated testing process can be broken down into the following steps:

- Set up the connection pool for data sources and validate the connections.

- Define the validation rules to apply against the data pipelines and select the data flow engine (Python or Spark).

- Configure the execution plans for the set of validation checks to be performed on the dataset.

- Schedule the execution plans, then monitor and report on the validation results.
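The steps above can be sketched end to end in a few lines of Python. This is a minimal illustration under stated assumptions, not TAF’s API. `ExecutionPlan`, `validate_connection` and `report` are hypothetical names, an in-memory SQLite database stands in for a real data source, and each check is assumed to be a SQL query that counts offending rows, so a result of 0 means pass. Scheduling is left out; in practice a scheduler would simply call `plan.run(...)` at regular intervals.

```python
import sqlite3
from dataclasses import dataclass, field
from datetime import datetime, timezone

@dataclass
class ExecutionPlan:
    """Hypothetical plan: a named group of checks, defined once, run many times."""
    name: str
    checks: dict[str, str]  # check name -> SQL returning a count of offending rows
    history: list[dict] = field(default_factory=list)

    def run(self, conn: sqlite3.Connection) -> dict:
        results = {}
        for check_name, sql in self.checks.items():
            (offending,) = conn.execute(sql).fetchone()
            results[check_name] = offending == 0  # pass when no offending rows
        record = {"run_at": datetime.now(timezone.utc).isoformat(), "results": results}
        self.history.append(record)  # execution history for monitoring
        return record

def validate_connection(conn: sqlite3.Connection) -> bool:
    """Step 1: confirm the data source answers a trivial query."""
    try:
        return conn.execute("SELECT 1").fetchone() == (1,)
    except sqlite3.Error:
        return False

def report(plan: ExecutionPlan) -> str:
    """Step 4: summarize the latest run of a plan."""
    latest = plan.history[-1]["results"]
    lines = [f"Plan {plan.name}: run {len(plan.history)}"]
    lines += [f"  {name}: {'PASS' if ok else 'FAIL'}" for name, ok in latest.items()]
    return "\n".join(lines)

conn = sqlite3.connect(":memory:")
assert validate_connection(conn)
conn.execute("CREATE TABLE customers (id INTEGER, email TEXT)")
conn.executemany("INSERT INTO customers VALUES (?, ?)", [(1, "a@x.com"), (2, None)])

# Steps 2 and 3: declare the rules and group them into a reusable plan.
plan = ExecutionPlan(
    name="customers_daily",
    checks={
        "null_emails": "SELECT COUNT(*) FROM customers WHERE email IS NULL",
        "duplicate_ids": "SELECT COUNT(*) FROM "
                         "(SELECT id FROM customers GROUP BY id HAVING COUNT(*) > 1)",
    },
)
plan.run(conn)                                                  # null_emails fails
conn.execute("UPDATE customers SET email = 'b@x.com' WHERE id = 2")
plan.run(conn)                                                  # same plan, rerun
print(report(plan))
# Plan customers_daily: run 2
#   null_emails: PASS
#   duplicate_ids: PASS
```

Keeping every run in `history` is what makes the monitoring step possible. A failing check can be traced back to the run, and the data state, in which it first appeared.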

Improve data quality automation to support productivity gains

Data quality drives how businesses make decisions and is imperative for success in today’s data-driven world. TAF can be used for end-to-end data validation, standardization and profiling, automating the configuration of data quality checks. From improving organizational efficiency and productivity to reducing test life cycle times by 30% and lowering the risks and costs associated with poor-quality data, TAF can help transform your automation processes. TAF has been used extensively across multiple client engagements to verify the quality of many large production datasets, and it can help you automate your data quality management pipelines.