GCP AI Platform Notebooks: Establishing a machine learning trained model

Step-by-step instructions on how to set up Google Cloud’s AI Platform Notebooks

July 17, 2020 | By: Satyartha Poppasani

For most organizations, two of the key measures of success are continuous innovation and speed to market. Today, much of that innovation is driven by the ability to build artificially intelligent systems with machine learning (ML). Speed to market, in turn, depends on empowering teams to reuse each other's work and collaborate.

Yet the biggest challenge in empowering these teams is that a data scientist's very first step, setting up a development environment, is itself difficult: it means installing all the necessary packages and libraries, plus the CUDA packages needed to run code on graphics processing units (GPUs).

This task is laborious and often error-prone, and the resulting package inconsistencies can slow down or derail model development. Even those who get past this initial pain soon realize that they are working in silos, seldom able to build on their teammates' work.

Leveraging GCP’s AI Platform Notebooks to create ML frameworks instantly

Google Cloud Platform (GCP) helps alleviate both of these issues with an enterprise-grade service called AI Platform Notebooks. The quintessential tool for machine learning engineers and data scientists is JupyterLab, a web-based interactive development environment for Jupyter notebooks. AI Platform Notebooks is a fully managed service that provides a JupyterLab environment with R, Python, and machine learning and deep learning packages preinstalled, plus optional GPUs, and it can be spun up in minutes.

In this step-by-step article, you'll learn how to set up AI Platform Notebooks and train a simple machine learning model on the popular UCI Census dataset. We'll walk through reading the dataset into a pandas data frame, preparing it for machine learning, splitting it into training and test sets, and finally training and evaluating a model.

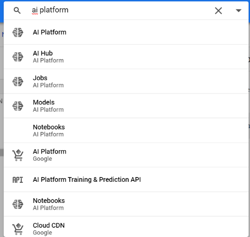

1. The first step is to set up a notebook on AI Platform. The easiest way is to head to console.cloud.google.com and search for “AI Platform.” Click on AI Platform.

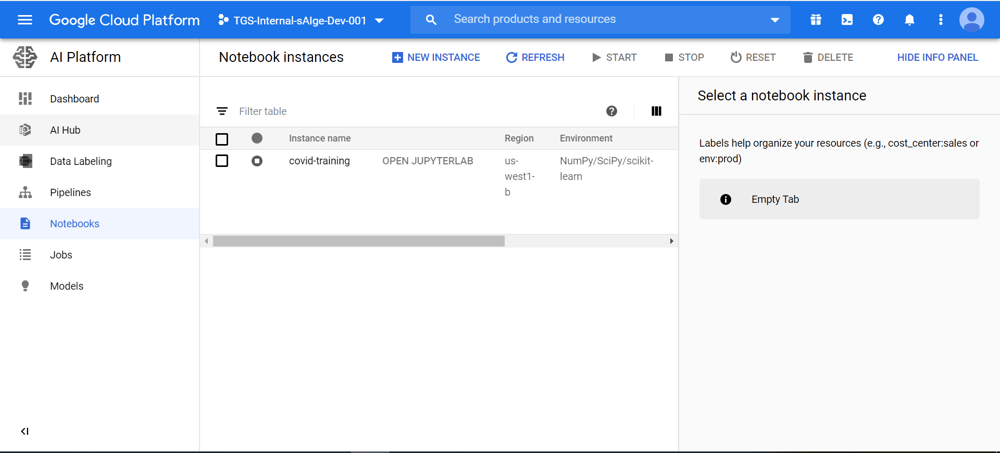

2. Click on Notebooks in the left panel.

3. Click on NEW INSTANCE at the top to spin up a new instance.

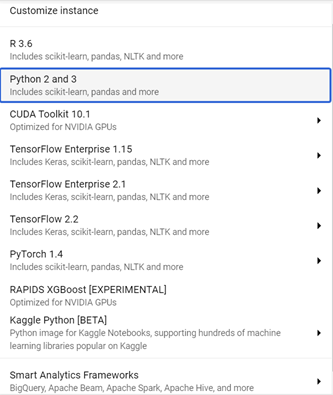

4. Google Cloud offers a number of preconfigured environments for a JupyterLab instance. You can choose one of these offerings or customize the setup completely.

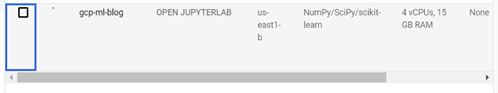

5. For this example, we will use the Python 2 and 3 instance type. Name the instance “gcp-ml-blog,” accept the defaults, and click on Create.

6. Once the instance is created, click on OPEN JUPYTERLAB to get to the web-based JupyterLab instance.

Getting started

For this example, we are using the popular Census dataset from the UCI Machine Learning Repository. Because this dataset is very old, it is not distributed as a ready-to-use comma-separated values (CSV) file and must first be converted into CSV format. For our purposes, this step has already been performed, and the CSV file can be read directly from this link.
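The article does not show the conversion itself. As a minimal sketch, assuming the raw file follows the layout of the UCI Adult (Census Income) `adult.data` file (values separated by a comma plus a space, no header row, missing values marked with `?`), pandas can attach the column names and write a proper CSV. The two rows below stand in for the real file, and the output name `census.csv` is hypothetical.

```python
import io

import pandas as pd

# Column names as listed in the UCI Adult (Census Income) dataset description.
COLUMNS = [
    "age", "workclass", "fnlwgt", "education", "education-num",
    "marital-status", "occupation", "relationship", "race", "sex",
    "capital-gain", "capital-loss", "hours-per-week", "native-country",
    "income",
]

# Two rows in the raw format (no header, ", " separators) standing in for the real file.
RAW = io.StringIO(
    "39, State-gov, 77516, Bachelors, 13, Never-married, Adm-clerical, "
    "Not-in-family, White, Male, 2174, 0, 40, United-States, <=50K\n"
    "50, Self-emp-not-inc, 83311, Bachelors, 13, Married-civ-spouse, "
    "Exec-managerial, Husband, White, Male, 0, 0, 13, United-States, <=50K\n"
)

# header=None: the raw file has no header row, so names= supplies one.
# skipinitialspace=True strips the space after each comma; na_values="?" marks missing values.
df = pd.read_csv(RAW, header=None, names=COLUMNS, skipinitialspace=True, na_values="?")

# In the notebook this would go to a file, e.g. df.to_csv("census.csv", index=False)
# (hypothetical name). Without a path, to_csv returns the CSV text as a string.
csv_text = df.to_csv(index=False)
print(csv_text.splitlines()[0])  # the new header row
```

Writing with `index=False` keeps the pandas row index out of the file, so the CSV contains only the 15 dataset columns.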

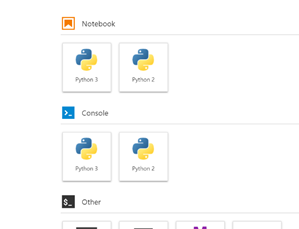

1. In the JupyterLab Launcher, under Notebook, click on Python 3.

2. This will bring up a Python 3 Jupyter notebook. Save the file as “gcp-ml-blog.ipynb.”

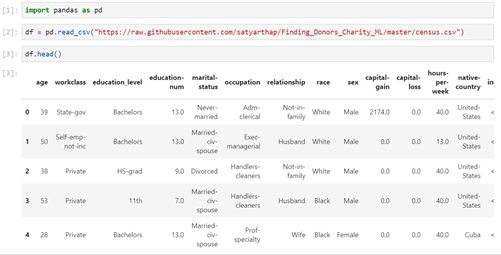

3. Import the pandas library and read the file directly from the link provided as follows.

4. For convenience, the CSV file is read directly from the link into a data frame. In practice, the most common way to bring data into Google Cloud is to upload it to Cloud Storage.
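The article's exact code and link appear only in the screenshot, so here is a minimal sketch of the same idea. `pd.read_csv` accepts a local path, an HTTP(S) URL, or a file-like object, and, when the optional `gcsfs` package is installed, a Cloud Storage `gs://` path. The bucket name in the comment and the helper name `load_census` are hypothetical, and the tiny in-memory CSV is made-up toy data so the snippet runs offline.

```python
import io

import pandas as pd


def load_census(source):
    """Read the census CSV (path, URL, gs:// path, or file-like object) into a DataFrame."""
    df = pd.read_csv(source)
    # Quick sanity checks that are useful right after loading.
    print(df.shape)
    print(df.head())
    return df


# In the notebook, `source` would be the CSV link, or a Cloud Storage path such as
# "gs://my-bucket/census.csv" (hypothetical bucket; gs:// paths require gcsfs).
# Toy rows stand in for the real file here.
sample = io.StringIO(
    "age,education,sex,hours-per-week,income\n"
    "39,Bachelors,Male,40,<=50K\n"
    "31,Masters,Female,50,>50K\n"
)
df = load_census(sample)
```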

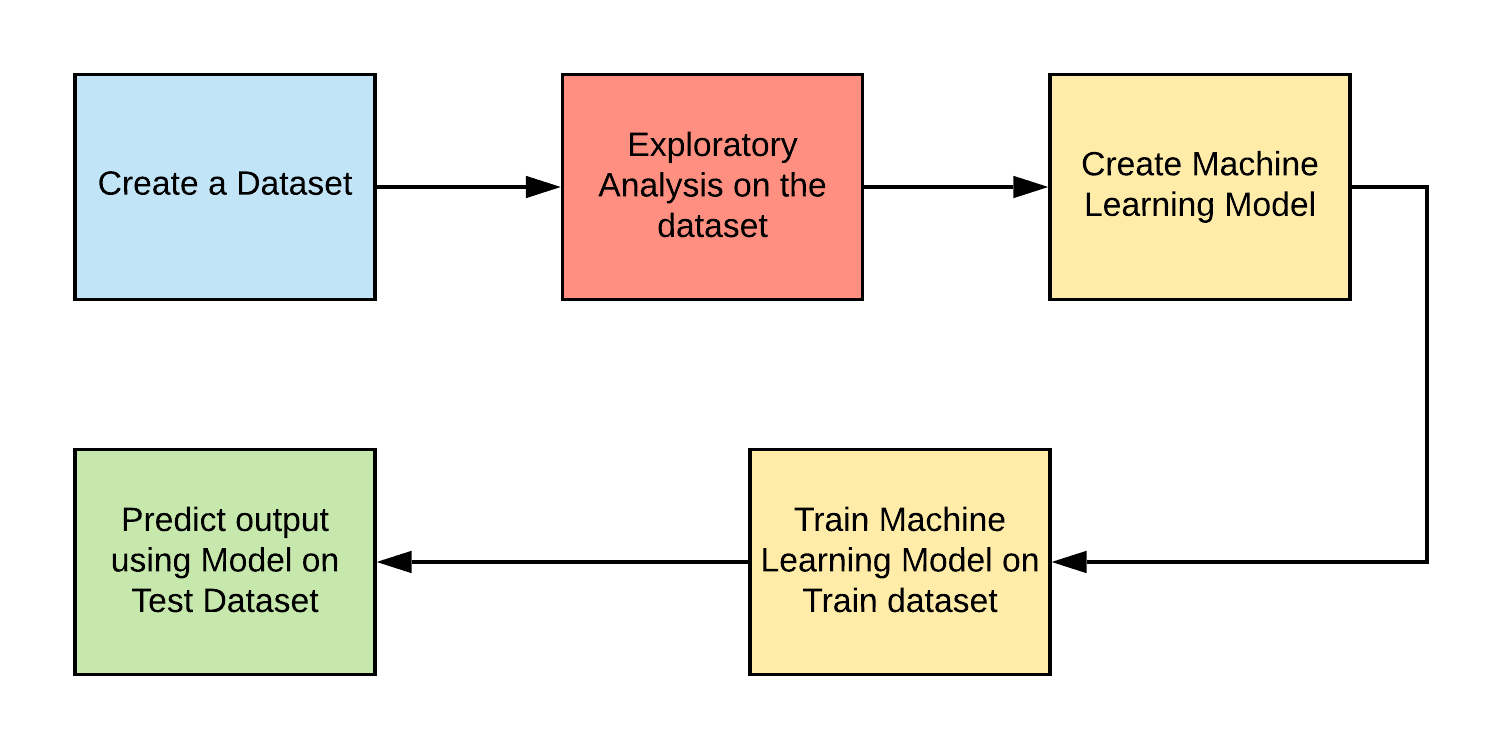

For brevity, this article skips exploratory analysis of the features and feature engineering, and goes straight to building a machine learning model.

Establishing the goal

To walk through the learning steps, we will build a model that predicts whether a person's income is above $50,000 or at most $50,000, given various details about that person. As the data frame shows, these details include age, level of education, marital status, occupation and more. These attributes, which help us predict the income bracket, are called features or independent variables. The income to be predicted is called the dependent variable.

Preparing the Dataset

Several of the features are categorical variables: their values come from a fixed set of categories, such as sex (male or female). Categorical variables must be converted to numbers before they can be fed into the model for training. This is done by creating dummy variables, one 0/1 column per category.
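To make the idea of dummy variables concrete, here is a minimal sketch using pandas' `get_dummies` on a toy frame. The author's own call is only visible in the screenshot, so treat this as an illustration of the technique rather than the article's exact code.

```python
import pandas as pd

# Toy data: one categorical feature (sex) and one numeric feature (age).
df = pd.DataFrame({"sex": ["Male", "Female", "Female"], "age": [39, 50, 28]})

# Replace the categorical column with one 0/1 indicator column per category.
# Non-encoded columns come first; dummy columns are named "<column>_<category>".
dummies = pd.get_dummies(df, columns=["sex"], dtype=int)
print(dummies)
```

Passing `drop_first=True` would drop one redundant indicator per feature, which matters for linear models; tree-based models such as the random forest used later do not require it.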

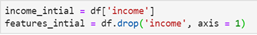

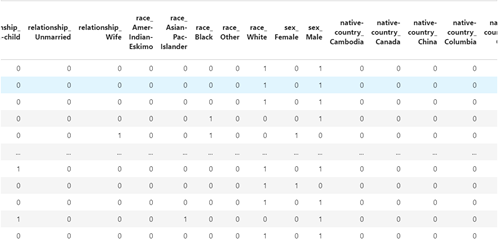

1. In machine learning, it is common practice to use X to denote the features and y to denote the dependent variable. Both are assigned from the data frame.

2. Several of our features are categorical, so we need to create dummy variables for them. The income column contains the strings "<=50K" or ">50K," which must be mapped to numbers (for example, 0 and 1) for training.

3. Once the categorical features are converted into dummy variables, every feature column is numeric and ready for the model.
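Steps 1 to 3 can be sketched as follows, assuming the data frame uses the UCI column names and an `income` column labeled "<=50K" or ">50K". The toy frame and the choice of which columns to encode are illustrative; in the full dataset the categorical columns would include workclass, education, marital-status, occupation, relationship, race, sex and native-country.

```python
import pandas as pd

# Toy stand-in for the census data frame loaded earlier.
df = pd.DataFrame({
    "age": [39, 50, 38, 53],
    "education": ["Bachelors", "Bachelors", "HS-grad", "11th"],
    "sex": ["Male", "Male", "Female", "Male"],
    "hours-per-week": [40, 13, 40, 40],
    "income": ["<=50K", ">50K", "<=50K", ">50K"],
})

# Dependent variable: map the income strings to 0/1.
y = df["income"].map({"<=50K": 0, ">50K": 1})

# Features: every other column, with the categorical ones one-hot encoded.
X = df.drop(columns=["income"])
categorical = ["education", "sex"]  # listed explicitly for this toy frame
X = pd.get_dummies(X, columns=categorical, dtype=int)

print(X.columns.tolist())
print(y.tolist())
```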

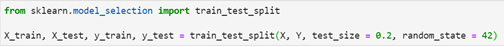

4. X and y are now ready for training, but first they should be split into training and test sets. This example uses the popular scikit-learn (sklearn) ML package, whose train_test_split function makes splitting data frames easy.

Setting the test_size argument to 0.2 keeps 20% of the rows for testing the model and uses the remaining 80% for training.

5. Setting random_state fixes the seed of the random shuffle, so anyone who runs the notebook gets the same train/test split.
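A minimal sketch of steps 4 and 5 with scikit-learn's `train_test_split`. The toy arrays stand in for the prepared X and y, and the seed value 42 is an arbitrary assumption (the article's value is only in the screenshot).

```python
import numpy as np
from sklearn.model_selection import train_test_split

# Toy features (10 rows, 2 columns) and labels standing in for the prepared X and y.
X = np.arange(20).reshape(10, 2)
y = np.array([0, 1] * 5)

# test_size=0.2 keeps 20% of the rows for testing; random_state fixes the shuffle
# so the same rows land in each split on every run.
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.2, random_state=42
)

print(X_train.shape, X_test.shape)  # 8 training rows, 2 test rows
```

Passing `stratify=y` would additionally keep the class ratio the same in both splits, which can be worth doing on the census data because far more people in it earn <=50K than >50K.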

Machine learning model training and evaluation

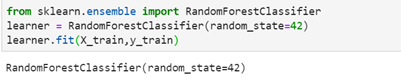

The data is now ready for training. We'll train a random forest classifier on it.

1. The RandomForestClassifier class must first be imported from sklearn (it lives in the sklearn.ensemble module). A random forest classifier is then created, assigned to the learner variable, and fit on the training data, X_train and y_train.

2. The random forest classifier is trained and ready for predictions.
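A minimal sketch of these two steps. Synthetic data from `make_classification` stands in for the census features so the snippet runs on its own, and the `n_estimators` and `random_state` values are assumptions rather than the author's settings.

```python
from sklearn.datasets import make_classification
from sklearn.ensemble import RandomForestClassifier
from sklearn.model_selection import train_test_split

# Synthetic binary-classification data standing in for the prepared census features.
X, y = make_classification(n_samples=200, n_features=8, random_state=0)
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.2, random_state=0
)

# Create the classifier, assign it to `learner`, and fit it on the training split.
learner = RandomForestClassifier(n_estimators=100, random_state=0)
learner.fit(X_train, y_train)

# The fitted model can now predict labels for rows it has not seen.
predictions = learner.predict(X_test)
print(predictions[:10])
```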

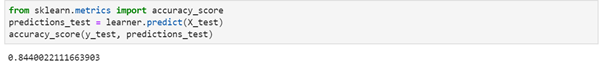

3. The model's accuracy on the test set can be measured with the accuracy_score function from sklearn.metrics.

In the end, this model achieved an accuracy of 84.40% on the test set.
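A minimal sketch of the evaluation step, again on synthetic stand-in data, so the printed numbers will not match the article's 84.40%. It also scores scikit-learn's `DummyClassifier`, which always predicts the most frequent class, as a reference point.

```python
from sklearn.datasets import make_classification
from sklearn.dummy import DummyClassifier
from sklearn.ensemble import RandomForestClassifier
from sklearn.metrics import accuracy_score
from sklearn.model_selection import train_test_split

# Synthetic stand-in data and a fitted random forest, as in the previous steps.
X, y = make_classification(n_samples=200, n_features=8, random_state=0)
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.2, random_state=0
)
learner = RandomForestClassifier(random_state=0).fit(X_train, y_train)

# Accuracy = fraction of test rows whose predicted label matches the true label.
y_pred = learner.predict(X_test)
accuracy = accuracy_score(y_test, y_pred)
print(f"Random forest accuracy: {accuracy:.2%}")

# Baseline: always predict the most frequent class seen in training.
baseline = DummyClassifier(strategy="most_frequent").fit(X_train, y_train)
baseline_accuracy = accuracy_score(y_test, baseline.predict(X_test))
print(f"Majority-class baseline: {baseline_accuracy:.2%}")
```

The baseline matters on the census data: roughly three quarters of the people in it earn <=50K, so a model that always predicts "<=50K" already scores around 76%, and the 84.40% should be read against that figure.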

4. Save the notebook and shut down the instance.

Conclusion

By the end of this exercise, you should have learned:

- How to create a GCP AI Platform Notebooks instance

- How to create a pandas data frame using a CSV file

- How to create a random forest machine learning classifier and train it on the training dataset

- How to evaluate the model on the test set, where it achieved an accuracy of 84.40%

“Every company is a data company,” so they say. But it’s the value this data can offer that lends itself to the bigger story—an intelligent story.

Google Cloud's AI Platform Notebooks is a powerful, easy way to get started building machine learning and deep learning models. Discover more with Google's Qwiklabs, which offers step-by-step labs for learning Google Cloud Platform. Learn how to build a more robust model on the census dataset using neural nets in this Qwiklab.

Images in this article were taken from Google Cloud Platform AI Platform Notebooks.

Satyartha Poppasani is a principal engineer for TEKsystems, with expertise leading data science, machine learning and AI.