Enterprise data analytics moves to the future

May 31, 2018 | By Shishir Shrivastava

Enterprises have been mining data for centuries, starting with handwritten bookkeeping ledgers, but data management really took off with the advent of database management systems (DBMS) in the late 1970s. Corporations started stockpiling data in their enterprise application systems, and database software became the backbone. Enter products like Oracle, IBM DB2, Teradata and MS SQL Server.

Operational systems grew rapidly, along with the data they gathered. Companies wanted to use that data to help executives make decisions quickly and effectively, but the systems couldn’t deliver analytical reports fast enough to make a real difference.

Data warehousing drivers

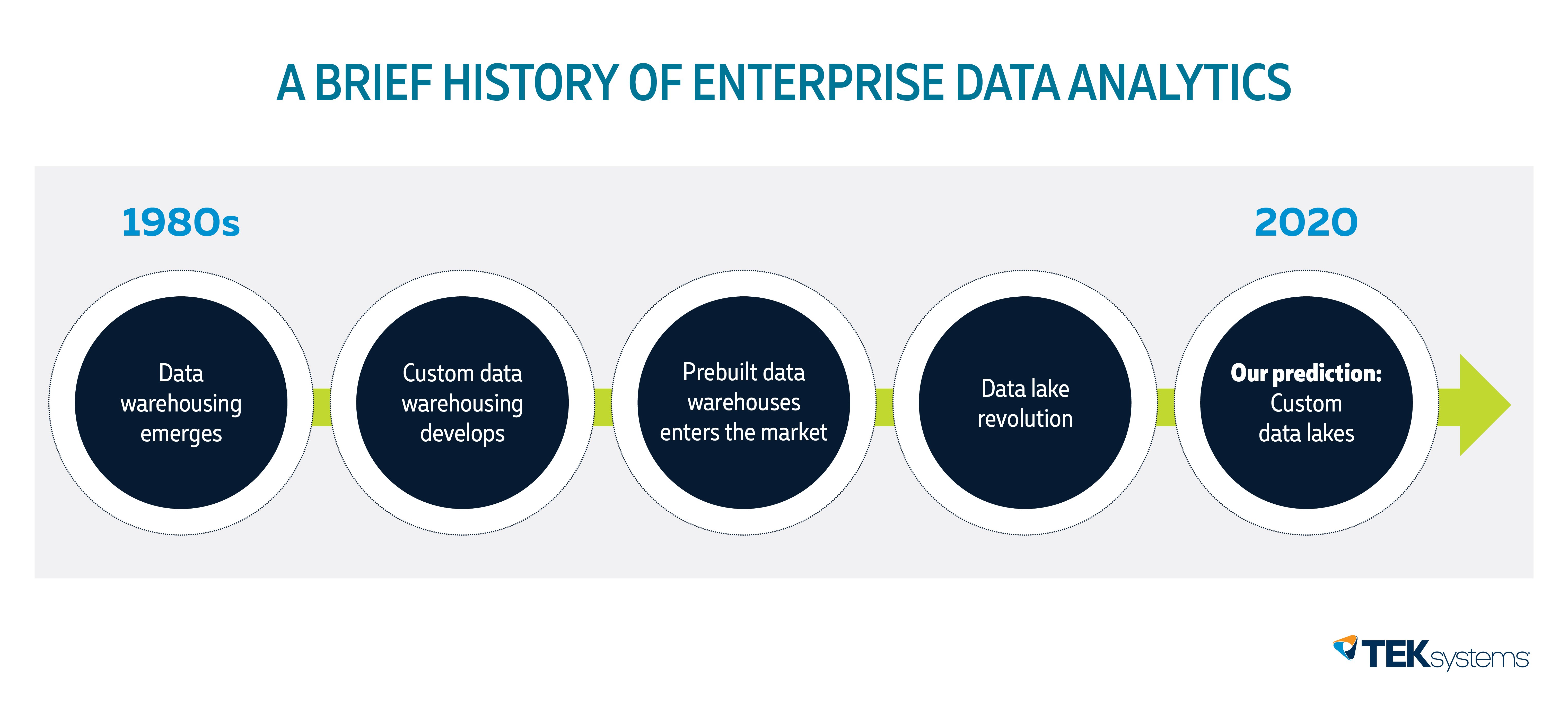

In the late 1980s, data warehousing emerged alongside online transaction processing systems.

What is a data warehouse and why is it important? Simply put, a data warehouse is an aggregate of data and information from multiple systems or sources. It’s designed so that enterprises storing their data in relational databases can use it for decision making.

Once the concept took hold, top management in big enterprises wanted to jump on the bandwagon. Several key factors drove enterprises towards data warehousing, including:

- Increasing complexity of an operational DBMS

- Sluggish delivery of analytical reporting

- Expensive analytical reporting from operational systems

- Analytical reporting was resource-intensive on operational data stores

- High cost of maintenance

The data warehouse outline

This paradigm of preparing data in enterprises gave rise to a new field of study: data warehousing. The essence of the framework is pulling data from source systems and offloading it into another relational database called a data warehouse.

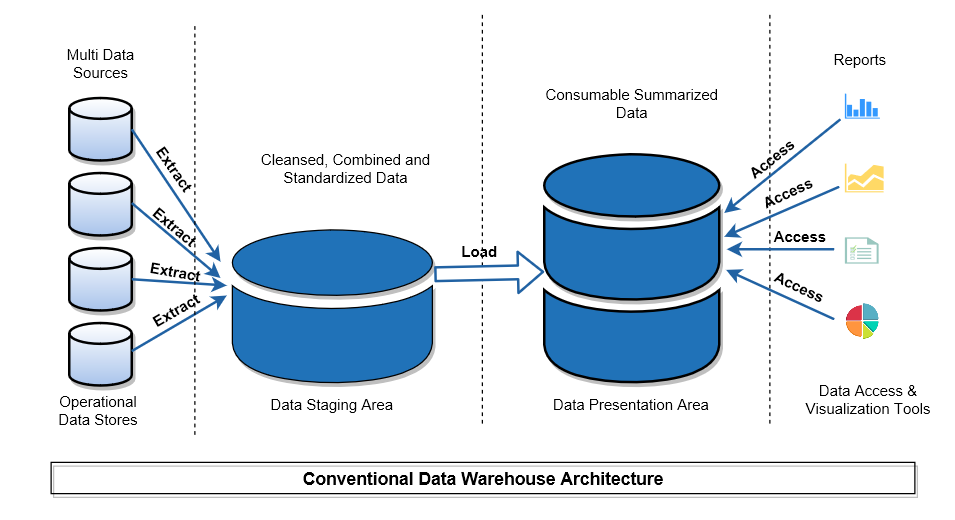

Diagram: Conventional data warehouse architecture

In this model, data is absorbed from various relational operational database systems and placed in a staging area. Once the data is brought in from the staging area, it’s processed, refined and transformed to suit the reporting needs. This cleansed data is then deployed in different data marts in order to streamline analytical reporting.
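The flow described above (staging, transformation, data mart) can be sketched in a few lines of Python. This is only an illustrative sketch using the standard library's in-memory SQLite database; the table names, columns and sample rows are assumptions made up for the example and do not come from any particular warehouse product.

```python
import sqlite3

def run_etl(source_rows):
    """Stage raw rows, cleanse them, and load a small sales data mart."""
    conn = sqlite3.connect(":memory:")
    cur = conn.cursor()

    # 1. Staging area: land the source rows as-is, everything as text.
    cur.execute(
        "CREATE TABLE staging_orders (order_id TEXT, region TEXT, amount TEXT)"
    )
    cur.executemany("INSERT INTO staging_orders VALUES (?, ?, ?)", source_rows)

    # 2. Transform: drop rows without an amount, normalize region names,
    #    and cast the amount to a number.
    cur.execute("""
        CREATE TABLE clean_orders AS
        SELECT order_id,
               UPPER(TRIM(region)) AS region,
               CAST(amount AS REAL) AS amount
        FROM staging_orders
        WHERE amount IS NOT NULL AND TRIM(amount) <> ''
    """)

    # 3. Data mart: pre-aggregate so reports read a small, ready-made table.
    cur.execute("""
        CREATE TABLE mart_sales_by_region AS
        SELECT region, COUNT(*) AS orders, SUM(amount) AS total_amount
        FROM clean_orders
        GROUP BY region
    """)
    conn.commit()
    return conn

# Hypothetical rows as they might arrive from an operational system.
source_rows = [
    ("1001", " east ", "250.00"),
    ("1002", "West", "100.50"),
    ("1003", "EAST", "49.50"),
    ("1004", "west", ""),  # missing amount, filtered out during cleansing
]

conn = run_etl(source_rows)
report = conn.execute(
    "SELECT region, orders, total_amount FROM mart_sales_by_region ORDER BY region"
).fetchall()
print(report)
```

The report query touches only the small pre-aggregated mart table, which is the reason analytical reporting stops competing with the operational system for resources.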

Custom data warehouses

This is the typical data warehousing architecture in most enterprises, which process their internally generated data via operational data stores. Since every industry, from finance to energy, has unique needs, the processing frameworks and paradigms are also unique. A one-size-fits-all product or solution isn’t possible. Custom data warehouses provided a much-needed analytical solution, not just to top executives but also to department and cost-center heads. Decision-making became faster thanks to readily available, preprocessed data from the operational systems. This technique not only resolved the complexities involved in providing analytical reports but also shortened delivery times. The precooked data significantly reduced the design and development effort for analytical reports. It also settled the problem of resource-intensive analytical reports running against operational data stores. Report rendering became rapid.

As enterprises started demanding more from their data, new fields like predictive analytics and business intelligence began to germinate. Smaller enterprises have also begun investing in their own custom data warehouses, but early adoption has given the larger enterprises a head start.

Prebuilt data warehouses

Amid the growth in database software, enterprise application development was also maturing, and new ERP software came along: JD Edwards, SAP, Oracle Applications, PeopleSoft, Siebel and more. Meanwhile, building enterprise-specific data warehousing solutions had its own challenges:

- Customization for each enterprise DW solution is time-consuming

- Designing a large warehouse is resource-intensive

- Skilled resources are needed to completely understand the source system

- Upgrades to the source system cause ripple effects on the data warehouse side

- High costs

Since ERPs have well-defined processes and standards, it became popular to design a prebuilt data warehouse framework on top of the standard ERP applications instead of designing a custom warehouse for each enterprise. These prebuilt warehouses have become the favored choice because of their low cost of ownership, faster deployment and limited need for customization.

Data lake revolution

As companies become more customer-centric, they seek to better understand customers and create a better user experience. This has necessitated capturing data from outside the organization through various channels, like email, web, text messages, social media, audio and video. And it’s a huge volume of data that’s not just structured in relational formats, but also unstructured. This is putting a lot of pressure on the data warehousing model, in both storage and analysis needs.

Processing varied forms of data at enormous scale has uncovered limitations in traditional data warehousing:

- It handles only homogeneous forms of data residing in relational databases

- Large volume batch processing, usually overnight

- Unavailability of real-time analytical reports

- The high cost of managing voluminous data in warehouses

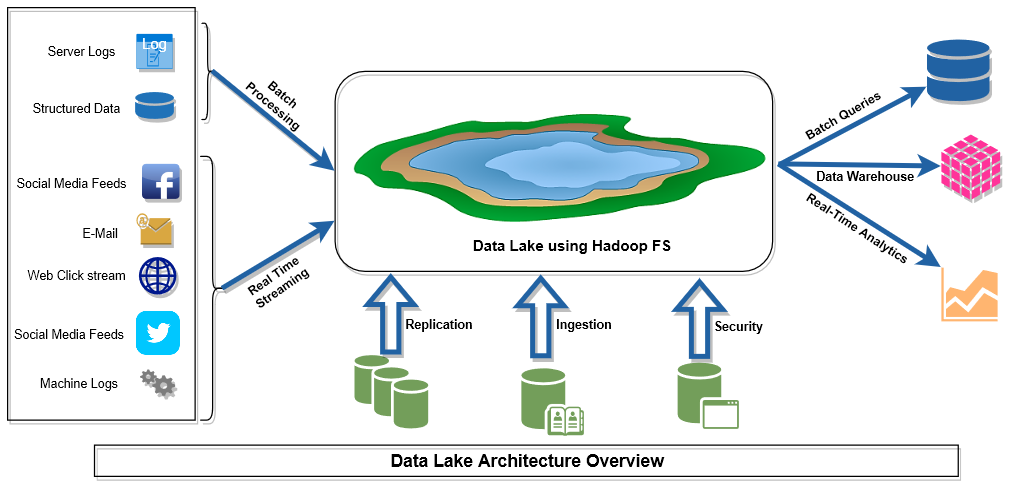

These limitations of data warehouses compelled the industry to look for a better solution. With the technology revolution around massively parallel processing (MPP), crunching enormous volumes of data is becoming faster. Unlike conventional data warehousing, modern data analytics takes a different approach to capturing data. Organizations now focus on keeping data in its native form, mining it with parallel processing techniques, and using state-of-the-art open source tools to pull the data and provide analytical reporting. This whole setup is popularly known as a “data lake,” a term coined by James Dixon, a founder of Pentaho, the BI tools company.

Diagram: Data lake architecture overview
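To make the idea of keeping data in its native form concrete, here is a minimal sketch in Python. It assumes a temporary local directory stands in for the lake and a thread pool stands in for an MPP cluster; the file names, field names (`text`, `comment`) and sample content are hypothetical. Files land unchanged, and structure is applied only when a question is asked (often called schema-on-read).

```python
import csv
import io
import json
import tempfile
from concurrent.futures import ThreadPoolExecutor
from pathlib import Path

def ingest(lake_dir, name, raw_text):
    """Land a payload in the lake unchanged; no schema is imposed on write."""
    path = Path(lake_dir) / name
    path.write_text(raw_text, encoding="utf-8")
    return path

def read_mentions(path):
    """Schema-on-read: interpret each file by its format only when queried."""
    text = path.read_text(encoding="utf-8")
    if path.suffix == ".json":
        return [record["text"] for record in json.loads(text)]
    if path.suffix == ".csv":
        return [row["comment"] for row in csv.DictReader(io.StringIO(text))]
    # Anything else is treated as free text, one message per line.
    return [line for line in text.splitlines() if line.strip()]

def count_keyword(lake_dir, keyword):
    """Scan all files in parallel and count messages mentioning the keyword."""
    files = sorted(Path(lake_dir).iterdir())
    with ThreadPoolExecutor(max_workers=4) as pool:
        per_file = pool.map(read_mentions, files)
        return sum(
            keyword in message.lower()
            for mentions in per_file
            for message in mentions
        )

with tempfile.TemporaryDirectory() as lake:
    # Three channels, three native formats, stored side by side.
    ingest(lake, "social.json", json.dumps(
        [{"text": "Love the new pump"}, {"text": "Delivery was late"}]))
    ingest(lake, "survey.csv",
           "customer,comment\nacme,Late again\nglobex,Great service\n")
    ingest(lake, "emails.txt",
           "Order arrived late and damaged\nThanks for the quick fix\n")
    late_mentions = count_keyword(lake, "late")

print(late_mentions)
```

In a real lake the same pattern would run on distributed storage and a cluster engine rather than local files and threads, but the division of labor is the same: cheap, schema-free ingestion up front and format-aware parallel reads at query time.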

What are the benefits of data lakes?

- Ability to handle structured and unstructured data

- Extreme speed in clustering data

- Democratization of data

- Real-time analytical reporting

- Scalability

- Low cost of ownership

Open source technology has given huge impetus to the research and innovation in this space. There are abundant open source tools available in the market to perform analysis of data on a large scale.

What are the tools for managing the data lake ecosystem?

- Cloud infrastructure (like AWS, Azure and Google Cloud) is a key component of a data lake and has made data availability ubiquitous

- Apache Hadoop is an open source framework whose distributed file system (HDFS) stores the data ingested into the lake

- Apache HBase is an open source distributed columnar database

- Apache Hive is an open source data warehousing tool for query, summarization and analysis

- Apache Pig is a high-level scripting platform whose scripts run as Hadoop jobs, commonly used for ETL processing

- Apache Spark is a cluster computing framework used for processing large volumes of data

- Talend is a data integration and quality tool

- Analytical reporting tools like Tableau, Power BI and Oracle OBIEE

I have firsthand experience working closely with a manufacturing customer who is implementing a data lake to streamline data management. It’s a large organization with multiple enterprise applications, each serving respective business units, and with multiple locations across the globe. They had isolated data warehouses for each enterprise application to get the analytical reports for business insights. Clearly, the holistic picture of data and direction for the entire organization was missing. They revamped the data strategy and implemented a data lake to enable unified enterprise-level BI reporting. This also made it possible to pull external non-organizational data to get a better perception of customer sentiment.

Happy with the success of the data lake investment, the customer’s IT organization is exploring various options to streamline real-time reporting.

Our engineering team at TEKsystems’ research and innovation lab has been working with a variety of tools and putting together a state-of-the-art, modern data lake architecture with a low total cost of ownership. As I write this, many accelerators and smart tools are being designed for rapid deployment of data lakes. Following the bell curve, after the traditional custom data warehouse, we’ve seen the dawn of the prebuilt data warehouse. This is the age of custom data lakes … let’s wait and watch the prebuilt data lake story unfold!